Last week I had the privilege of attending the 2025 Progress Conference, which brought together a diverse cadre of people working on progress, metascience, artificial intelligence, and related fields. I was surprised by how optimistic the median attendee was about AI for science. While some people inside science have been excited about the possibilities of AI for a while, I didn’t expect that representatives of frontier labs or think tanks would expect scientific progress to be the biggest near-term consequence of AI.

At this point it’s obvious that AI will affect science in many ways. To name just a few:

All of the above feel inevitable to me: even if all fundamental AI progress halted, we would almost certainly see significant and impactful progress in each of these areas for years to come. Here I instead want to focus on the more speculative idea of “AI scientists,” or agentic AI systems capable of some independent and autonomous scientific exploration. After having a lot of conversations about “AI scientists” recently with people from very different backgrounds, I’ve developed some opinions on the field.

Note: while it’s standard practice to talk about AI models in personal terms, anthropomorphizing AI systems can allow writers to sneak in certain metaphysical assumptions. I suspect that any particular stance I take here will alienate some fraction of my audience, so I’m going to put the term “AI scientist” in quotes for the rest of this post to make it clear that I’m intentionally not taking a stance on the underlying metaphysics here. You can mentally replace “AI scientist” with “complex probabilistic scientific reasoning tool,” if you prefer, and it won’t impact any of my claims.

I’m also taking a permissive view of what it means to be a scientist here. To some people, being a scientist means that you’re independently capable of following the scientific method in pursuit of underlying curiosity-driven research. I don’t think this idealistic vision practically describes the bulk of what “science” actually looks like today, as I’ve written about before, and I think lots of people who have “Scientist” in their job titles are instead just doing regular optimization- or search-based work in technical areas. If calling these occupations “science” offends you or you think there’s additional metaphysical baggage associated with being a “scientist,” you’re welcome to mentally replace “AI scientist” with “AI research assistant,” “AI lab technician,” or “AI contract researcher” and it also won’t materially impact this post.

With those caveats out of the way, here are seven ideas that I currently believe are true about “AI scientists.”

Early drafts of this post didn’t include this point, because after spending time in Silicon Valley I thought the potential of “AI scientists” was obvious enough to be axiomatic. But early readers’ responses reminded me that ~~the East Coast is full of decels~~ different groups have very different intuitions about AI, so I think it’s worth dwelling on this point a little bit.

Some amount of skepticism about deploying “AI scientists” on real problems is warranted, I think. At a high level, we can partition scientific work into two categories:

One objection to “AI scientists” might go like this: current models like LLMs are unreliable, so we don’t want to rely on them for deductive work. If I want to do deductive work, I’d rather use software with “crystallized intelligence” that has hard-coded verifiable ways to get the correct answer; I don’t want my LLM to make up a new way to run ANOVA every time, for instance. LLMs might be useful for vibe-coding a downstream deductive workflow or for conducting exploratory data analysis, but the best solution for any deductive problem will probably not be an LLM.

But also, we hardly want to rely on LLMs for inductive work, since we can’t verify that their conclusions will be correct. Counting on LLMs to robustly get the right conclusions from data in a production setting feels like a dubious proposition—maybe the LLM can propose some conclusions from a paper or help scientists perform a literature search, but taking the human all the way out of the loop seems dangerous. So if the LLMs can’t do induction and they can’t do deduction, what’s the point?

I think this objection makes a lot of sense, but I think there’s still a role for AI models today in science. Many scientific problems combine some amount of open-ended creativity with subsequent data-driven testing—consider, for instance, the drug- and materials-discovery workflows I outlined in my previous essay about how “workflows are the new models.” Hypothesis creation requires creativity, but testing hypotheses requires much less creativity and provides an objective way to test the ideas. (There’s a good analogy to NP-complete problems in computer science: scientific ideas are often difficult to generate but simple to verify.)

Concretely, then, I think there are a lot of ways in which even hallucination-prone “AI scientists” can meaningfully accelerate science. We can imagine creating task- and domain-specific agentic workflows in which models propose new candidates on the basis of literature and previous data and then test these candidates using deterministic means. (This is basically how a lot of human scientific projects work anyhow.) Even if “AI scientists” are 5x or 10x worse than humans at proposing new candidates, this can still be very useful so long as the cost of trying new ideas is relatively low: I would rather have an “AI scientist” run overnight and virtually screen thousands of potential catalysts than have to manually design a dozen catalysts myself.
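Here is a toy sketch of that propose-then-verify loop. Everything in it is hypothetical: `propose_candidates` stands in for an unreliable model-driven proposer (it is just a random number generator), `score` stands in for a deterministic simulation, and the "optimum" of 7.3 is an arbitrary number chosen for illustration.

```python
import random


def propose_candidates(rng, n):
    """Stand-in for an unreliable proposer: most guesses are poor."""
    return [rng.uniform(0.0, 10.0) for _ in range(n)]


def score(candidate):
    """Stand-in for a deterministic check, such as a simulation.

    Higher is better; the made-up optimum sits at 7.3.
    """
    return -abs(candidate - 7.3)


def screen(n_candidates, seed=0):
    """Propose many candidates, score each one deterministically, keep the best."""
    rng = random.Random(seed)
    candidates = propose_candidates(rng, n_candidates)
    return max(candidates, key=score)


# A dozen hand-picked guesses versus an overnight screen of thousands.
best_of_12 = screen(12)
best_of_5000 = screen(5000)
```

The proposer here never gets any smarter. The larger screen wins only because each candidate is cheap to check and the check itself is trustworthy, which is the regime the argument above depends on.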

(All of these considerations change if the cost of trying new ideas becomes very high, which is why questions about lab automation become so important to ambitious “AI scientist” efforts. More on this later in the essay.)

Viewed through this framework, “AI scientists” are virtually guaranteed to be useful so long as they’re able to generate useful hypotheses in complex regimes with more-than-zero accuracy. While this might not have been true a few models ago, there’s good evidence emerging that it’s definitely true now: I liked this paper from Nicholas Runcie, but there are examples from all over the sciences showing that modern LLMs are actually pretty decent at scientific reasoning and getting better. Which brings us to our next point…

A few years ago, “AI scientists” and “autonomous labs” were considered highly speculative ideas outside certain circles. Now, we’ve seen a massive amount of capital deployed towards building what are essentially “AI scientists”: Lila Sciences and Periodic Labs have together raised almost a billion dollars in venture funding, while a host of other companies have raised smaller-but-still-substantial rounds in service of similar goals: Potato, Cusp, Radical AI, and Orbital Materials all come to mind.

Frontier research labs are also working towards this goal: Microsoft recently announced the Microsoft Discovery platform, while OpenAI has been scaling out their scientific team. (There are also non-profits like FutureHouse working towards building AI scientists.) All of this activity speaks to a strong revealed preference that short-term progress in this field is very likely (although, of course, these preferences may be wrong). If you’re skeptical of these forward-looking signs, though, there’s a lot of agentic science that’s already happening right now in the real world.

A few weeks ago, Benchling (AFAIK the largest pre-clinical life-science software company) released Benchling AI, an agentic scientific tool that can independently search through company data, conduct analysis, and run simulations. While Benchling isn’t explicitly framing this as an “AI scientist,” that’s essentially what this is—and the fact that a large software company feels confident enough to deploy this to production across hundreds of biotech and pharma companies should be a strong sign that “AI scientists” are here to stay.

Benchling’s not the only company with “AI scientists” in production. Rush, Tamarind Bio, and others have built “AI scientists” for drug discovery, while Orbital Materials seems to use an internal agentic AI system for running materials simulations. There are also a lot of academic projects around agentic science, like El Agente, MDCrow, and ChemGraph.

There are a lot of reasonable criticisms one can make here about branding and venture-funded hype cycles, what constitutes independent scientific exploration vs. mere pattern matching, and so on & so forth. And it’s possible that we won’t see many fundamental advances here—perhaps AI models are reaching some performance ceiling and will be capable of only minor tool use and problem solving, not the loftier visions of frontier labs. But even skeptics will find it virtually impossible to escape the conclusion that AI systems that are capable of non-trivial independent and autonomous scientific exploration are already being deployed towards real use cases, and I think this is unlikely to go away moving forward.

When I started my undergraduate degree, I recorded NMR spectra manually by inserting a sample into the spectrometer and fiddling with cables, shims, and knobs on the bottom of the machine. By the time I finished my PhD, we had switched over to fully automated NMR systems—you put your sample in a little holder, entered the number of the holder and the experiment that you wanted to be run, and walked away. In theory, this could mean that we didn’t have to spend as much time on our research; in practice, we all just ended up running way more NMR experiments since it was so easy.

This anecdote illustrates an important point: as increasing automation happens, the role of human scientists just smoothly shifts to accommodate higher- and higher-level tasks. One generation’s agent becomes the next generation’s tool. I expect that the advent of “AI scientists” will mean that humans can reposition themselves to work on more and more abstract tasks and ignore a growing amount of the underlying minutiae—the work of scientists will change, but it won’t go away.

This change is not only good but necessary for continued scientific progress. I’ve written before about how data are getting cheaper: across a wide variety of metrics, the cost per data point is falling and the amount of data needed to do cutting-edge science is increasing concomitantly. Viewed from this perspective, “AI scientists” may be the only way that we’re able to continue to cope with the complexity of modern scientific research.

As the scope of research grows ever broader, we will need the leverage that “AI scientists” can give us to continue pursuing ever-more impactful and ambitious scientific projects. With luck, we’ll look back on the days of manually writing input files or programming lab instruments and wonder how we ever got anything done back then. This is doubly true if we consider the leverage afforded by lab automation: automating routine experiments will allow humans to focus on developing new techniques, designing future campaigns, or deeply analyzing their data.

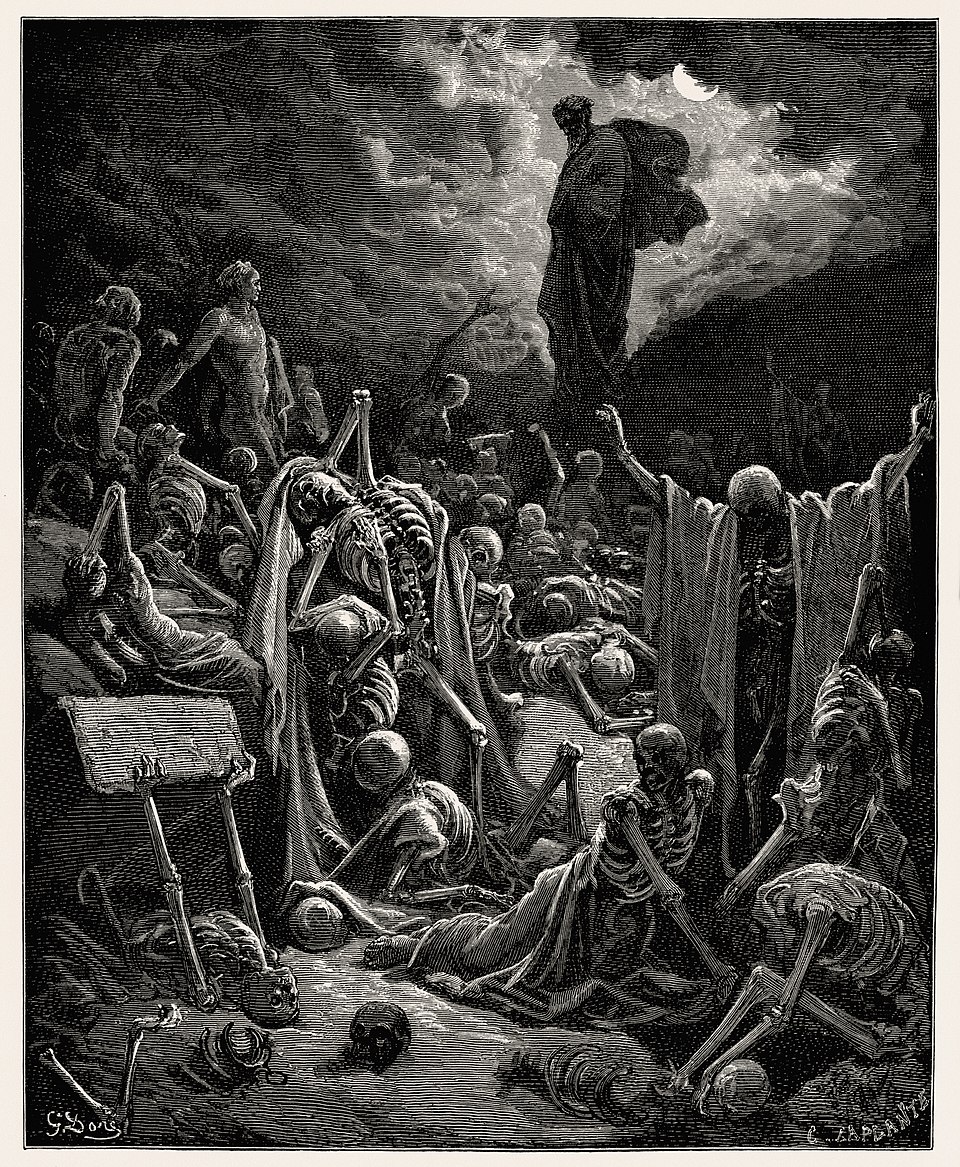

(A closely related idea is that of the “burden of knowledge”—as we learn more and more about the world, it becomes progressively more and more difficult for any one person to learn and maintain all this information. For the unfamiliar, Slate Star Codex has a particularly vivid illustration of this idea. There are several AI-related solutions to this problem: increased compartmentalization and abstraction through high-level tool use helps to loosely couple different domains of knowledge, while improved mechanisms for literature search and digestion make it easier to learn and retrieve necessary scientific knowledge.)

In Seeing Like A State, James Scott contrasts technical knowledge (techne) with what he calls metis (from Greek μητις), or practical knowledge. One of the unique characteristics of metis is that it’s almost impossible to translate into “book knowledge.” I’ll quote directly from Scott here (pp. 313 & 316):

Metis represents a wide array of practical skills and acquired intelligence in responding to a constantly changing natural and human environment.… Metis resists simplification into deductive principles which can successfully be transmitted through book learning, because the environments in which it is exercised are so complex and non-repeatable that formal procedures of rational decision making are impossible to apply.

My contention is that there’s a lot of metis in science, particularly in experimental science. More than almost any other field, science still adheres to a master–apprentice model of learning wherein practitioners build up skills over years of practice and careful study. While some of the knowledge acquired in a PhD is certainly techne, there’s a lot that’s metis as well. Ask any experimental scientist and they’ll tell you about the tricks, techniques, and troubleshooting that were key to any published project (and which almost never make it into the paper).

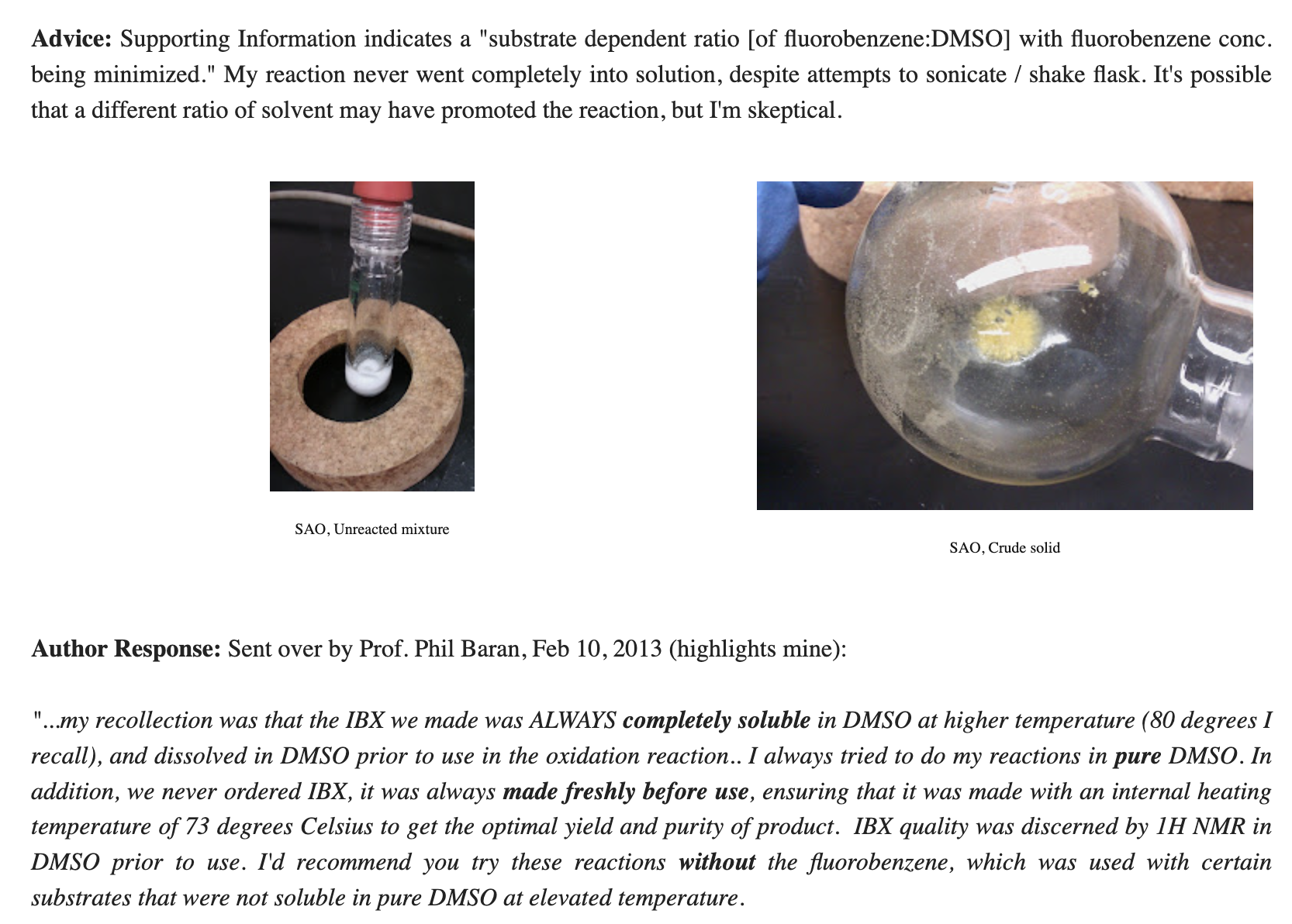

For non-scientists, the story of BlogSyn may help to illustrate what I mean. BlogSyn was a project in which professional organic chemists attempted to reproduce published reactions based only on the procedure actually written in the literature. On paper, this seems like it should work: one expert scientist reads a peer-reviewed publication with a detailed experimental procedure and reproduces a randomly chosen result. In two out of three cases, however, the authors were unable to get the reaction to work as reported without consulting the original authors. (The saga with Phil Baran lasted about a month and became a bit acrimonious: here’s part 1 and part 2.)

The moral of this story isn’t that the original authors were negligent, I think. Rather, it turns out that it’s really hard to actually convey all of the experience of laboratory science through the written word, in keeping with Scott’s points about metis. A genius scientist who’d read every research paper but never set foot in a lab would not be able to immediately become an effective researcher, but this is basically what we expect from lofty visions of “AI scientists.”

Accelerationist lab-automation advocates might argue that metis is basically “cope”: in other words, that technological improvements will obviate the need for fuzzy human skills just like mechanical looms and sewing machines removed the need for textile craftsmanship. There’s some truth to this: improvements in automation can certainly make it easier to run experiments. But robust automatable protocols always lag behind the initial flexible period of exploration. One first discovers the target by any means necessary and only later finds a way to make the procedure robust and reliable. “AI scientists” will need to be able to handle the ambiguity and complexity of novel experiments to make the biggest discoveries, and this will be hard.

Humans can help, and I think that keeping humans somewhere in the loop will go a long way towards addressing these issues. The vast majority of AI-based scientific successes to date have implicitly relied on some sort of metis somewhere in the stack: if you’re training an AI-based reaction-prediction model on high-throughput data collected autonomously, the original reaction was still developed through the hard-earned intuition of human scientists. In fact, one can imagine in analogy to Amdahl’s Law that automation would vastly increase the returns to metis rather than eliminating it.
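The Amdahl's Law analogy can be made concrete with a little arithmetic. In this sketch, the assumption is that some fraction of a project is routine work that automation accelerates by a fixed factor, while the remainder (the metis-dependent part) runs at its original speed. The 90% and 100x figures are hypothetical, chosen only to illustrate the shape of the effect.

```python
def amdahl_speedup(automatable_fraction, automation_speedup):
    """Overall speedup when only part of the work gets faster (Amdahl's Law)."""
    remaining = 1.0 - automatable_fraction
    return 1.0 / (remaining + automatable_fraction / automation_speedup)


def unautomated_share_of_time(automatable_fraction, automation_speedup):
    """Fraction of the new, shorter timeline spent on the unautomated part."""
    remaining = 1.0 - automatable_fraction
    total = remaining + automatable_fraction / automation_speedup
    return remaining / total


# Hypothetical project: 90% routine work, automated 100x.
overall = amdahl_speedup(0.9, 100.0)           # about 9.2x, not 100x
share = unautomated_share_of_time(0.9, 100.0)  # about 0.92, up from 0.10
```

Under these assumptions the unautomated work goes from a tenth of the project to over nine-tenths of it, so any improvement in that human-driven portion now translates almost directly into overall throughput.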

Given all these considerations, I expect that building fully self-driving labs will be much harder than most people think. The human tradition of scientific knowledge is powerful and ought not to be lightly discarded. I don’t think it’s a coincidence that the areas where the biggest “AI scientist” successes have happened to date—math, CS, and computational science—are substantially more textually legible than experimental science, and I think forecasting success from these fields into self-driving labs is likely to prove misleading.

(Issues of metis aren’t confined to experimental science—the “scientific taste” about certain ideas that researchers develop is also a form of metis that at present seems difficult to convey to LLMs. But I think it’s easier to imagine solving these issues than the corresponding experimental problems.)

In a previous post on this blog, I wrote about using ChatGPT to play GeoGuessr—there, I found that o3 would quietly solve complicated trigonometric equations under the hood to predict latitude using Python. o3 is a pretty smart model, and it’s possible that it could do the math itself just by thinking about it, but instead it uses a calculator to do quantitative reasoning (the same way that I would). More generally, LLMs seem to mirror a lot of human behavior in how they interact with data: they’re great at reading and remembering facts, but they’re not natively able to do complex high-dimensional reasoning problems just by “thinking about them” really hard.

Human scientists solve this problem by using tools. If I want to figure out the correlation coefficient for a given linear fit or the barrier height for a reaction, I don’t try to solve it in my head—instead, I use some sort of task-specific external tool, get an answer, and then think about the answer. The conclusion here is obvious: if we want “AI scientists” to be any good, we need to give them the same tools that we’d give human scientists.

Many people are surprised by this claim, thinking instead that superintelligent “AI scientists” will automatically rebuild the entire ecosystem of scientific tools from scratch. Having tried to vibe-code a fair number of scientific tools myself, I’m not optimistic. We don’t ask coding agents to write their own databases or web servers from scratch, and we shouldn’t ask “AI scientists” to write their own DFT code or MD engines from scratch either.

More abstractly, there’s an important and natural partition between deterministic simulation tools and flexible agentic systems. Deterministic simulation tools have very different properties than LLMs—there’s almost always a “right answer,” meaning that the tools can be carefully benchmarked and tested before being embedded within a larger agentic system. Since science is complicated, this ability to partition responsibility and conduct component-level testing will be necessary for building robust systems.

Deterministic simulation tools also require fixed input and output data: they’re not able to handle the messy semi-structured data typical of real-world scientific problems, instead relying on the end user to convert this into a well-structured simulation task. Combining these tools with an LLM makes it possible to ask flexible scientific questions and get useful answers without being a simulation expert; the LLM can deploy the tools and figure out what to make of the outputs, reducing the work that the end scientist has to do.
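To make this partition concrete, here is a minimal sketch of a deterministic tool with a fixed input type, exposed through a small dispatcher that an agent could call with structured arguments. The names `FitInput`, `TOOLS`, and `call_tool` are hypothetical and do not correspond to any real agent framework or to Rowan's API; the tool itself is the correlation-coefficient example from earlier.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class FitInput:
    """Fixed, well-structured input for the tool below."""
    x: list
    y: list


def pearson_r(data: FitInput) -> float:
    """Deterministic tool: Pearson correlation coefficient of x and y."""
    if len(data.x) != len(data.y) or len(data.x) < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    n = len(data.x)
    mean_x = sum(data.x) / n
    mean_y = sum(data.y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(data.x, data.y))
    var_x = sum((a - mean_x) ** 2 for a in data.x)
    var_y = sum((b - mean_y) ** 2 for b in data.y)
    if var_x == 0 or var_y == 0:
        raise ValueError("x and y must not be constant")
    return cov / math.sqrt(var_x * var_y)


# Hypothetical registry mapping tool names to (input type, function).
TOOLS = {"pearson_r": (FitInput, pearson_r)}


def call_tool(name, arguments):
    """Dispatch a structured request, e.g. one parsed from a model's output."""
    input_type, func = TOOLS[name]
    return func(input_type(**arguments))


# Component-level check against a case with a known right answer.
assert abs(call_tool("pearson_r", {"x": [1, 2, 3], "y": [2, 4, 6]}) - 1.0) < 1e-12
```

The design choice worth noticing is the division of labor: the tool can be benchmarked in isolation because it has a right answer, while the flexible part of the system only has to produce arguments that fit the schema and interpret the number that comes back.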

Rowan, the company I co-founded in 2023, is an applied research company that focuses on building a design and simulation platform for drug discovery and materials science. We work to make it possible to use computation to accelerate real-world discovery problems—so we develop, benchmark, and deploy computational tools to scientific teams with a focus on pragmatism and real-world impact.

When we started Rowan, we didn’t think much about “AI scientists”—I assumed that the end user of our platform would always be a human, and that building excellent ML-powered tools would be a way to “give scientists superpowers” and dramatically increase researcher productivity and the quality of their science. I still think this is true, and (as discussed above) I doubt that we’re going to get rid of human-in-the-loop science anytime soon.

But sometime over the last few months, I’ve realized that we’re building tools just as much for “AI scientists” as we are for human scientists. This follows naturally from the above conclusions: “AI scientists” are here, they’re going to be extremely important, and they’re going to need tools. Even more concretely, I expect that five years from now more calculations will be run on Rowan by “AI scientists” than human scientists. To be clear, I think that these “AI scientists” will still be piloted at some level by human scientists! But the object-level task of running actual calculations will be more often than not done by the “AI scientists,” or at least that’s the prediction.

How does “building for the ‘AI scientists’” differ from building for humans? Strangely, I don’t think the task is that different. Obviously, there are some trivial interface-related considerations: API construction matters more for “AI scientists,” visual display matters less, and so on & so forth. But at a core level, the task of the tool-builder is simple—to “cut reality at its joints” (following Plato), to find the natural information bottlenecks that create parsimonious and composable ways to model complex systems. Logical divisions between tools are intrinsic to the scientific field under study and do not depend on the end user.

This means that a good toolkit for humans will also be a good toolkit for “AI scientists,” and that we can practice building tools on humans. In some sense, one can see all of what we’re doing at Rowan as practice: we’re validating our scientific toolkit on thousands of human scientists to make sure it’s robust and effective before we hand it off to the “AI scientists.”

If logical divisions between tools are indeed intrinsic to scientific fields, then we should also expect the AI-driven process of science to be intelligible to humans. We can imagine a vision of scientific automation that ascends through progressively higher layers of abstraction. Prediction is hard, especially about the future, but I’ll take a stab at what this might look like specifically for chemistry.

At low levels, we have deterministic simulation tools powered by physics or ML working to predict the outcome and properties of specific physical states. This requires very little imagination: Rowan’s current product acts like this, as do many others, and computational modeling and simulation tools are already deployed in virtually every modern drug- and materials-design company.

Above that, we can imagine “AI scientists” managing well-defined multi-parameter optimization campaigns. These agents can work to combine or orchestrate the underlying simulation tasks in pursuit of a well-defined goal, like a given target–product profile, while generating new candidates based on scientific intuition, previous data, and potentially human input. Importantly, the success or failure of these agents can objectively be assessed by tracking how various metrics change over time, making it easy for humans to supervise and verify the correctness of the results. Demos of agents like this are already here, but I think we’ll start to see these being improved and used more widely within the next few years.

Other “AI scientist” phenotypes could also be imagined. While progress in lab automation is difficult to forecast, it’s not hard to hope for a future in which a growing amount of routine lab work could be automated and overseen by “AI scientists” working to debug synthetic routes and verify compound identity. As discussed above, my timelines for this are considerably gloomier than for simulation-only agents owing to the metis issue, but it’s worth noting that even focused partial solutions here would be quite helpful. This “experimental AI scientist” would naturally complement the “computational AI scientist” described in the previous paragraph, even if considerable human guidance and supervision are needed.

A third category of low-level “AI scientist” is the “AI research assistant” that conducts data analysis and reads the literature. This is basically an enhancement of Deep Research, and I think some form of this is already available and will be quite useful within the next few years.

It’s easy to imagine a human controlling all three of the above tools, just like an experienced manager can deploy an army of lab techs and contractors towards a specific target. But why not ascend to an even higher layer of abstraction? We can imagine “AI project managers” that coordinate computational screening, experimentation, and literature search agents towards a specific high-level goal. These agents would be in charge of efficiently allocating resources between exploration and exploitation on the basis of the scientific literature, simulated results, and previous experimental data. Again, they could easily be steered by humans to improve their strategy or override prioritization.
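One simple and well-known way to frame the tradeoff between trying new directions and doubling down on promising ones is an epsilon-greedy rule. The sketch below is only an illustration of that textbook idea, not a claim about how an “AI project manager” would actually work: the three "projects," their success rates, and the function names are all made up.

```python
import random


def choose_project(mean_payoffs, epsilon, rng):
    """Explore a random project with probability epsilon; otherwise
    exploit the project with the best observed mean payoff."""
    if rng.random() < epsilon:
        return rng.randrange(len(mean_payoffs))
    return max(range(len(mean_payoffs)), key=lambda i: mean_payoffs[i])


def run_campaign(true_success_rates, n_experiments, epsilon=0.1, seed=0):
    """Allocate a fixed budget of experiments across projects."""
    rng = random.Random(seed)
    counts = [0] * len(true_success_rates)
    means = [0.0] * len(true_success_rates)
    for _ in range(n_experiments):
        i = choose_project(means, epsilon, rng)
        # Simulated experiment: success with the project's hidden rate.
        outcome = 1.0 if rng.random() < true_success_rates[i] else 0.0
        counts[i] += 1
        means[i] += (outcome - means[i]) / counts[i]  # running average
    return counts, means


# Three hypothetical projects with hidden success rates.
counts, means = run_campaign([0.05, 0.20, 0.50], n_experiments=2000)
```

One would expect most of the budget to drift towards the third project as evidence accumulates, although the exact split depends on the seed. A human supervisor could steer a system like this by adjusting `epsilon` or by overriding the choice outright, which matches the kind of oversight described above.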

This last layer of abstraction probably only makes sense if (1) the low-level abstractions become sufficiently robust and either (2) the cost of experimentation falls low enough that human supervision becomes a realistic bottleneck or (3) the underlying models become smart enough that they’re better at managing programs than humans are. Different people have very different intuitions about these questions, and I’m not going to try and solve them here—it’s possible that supervision at this level remains human forever, or it’s possible that GPT-6 is capable enough that you’d rather let the AI models manage day-to-day operations than a person. I would be surprised if “AI scientists” were operating at this level within the next five years, but I wouldn’t rule it out in the long term.

The overall vision might sound a little bit like science fiction, and at present it remains science fiction. But I like this recursively abstracted form of scientific futurism because it’s ambitious while preserving important properties like legibility, interpretability, and auditability. There are also tangible short-term goals associated with this vision: individual components can be tested and optimized independently and, over time, integrated into increasingly capable systems. We don’t need to wait around for GPT-6 to summon scientific breakthroughs “from the vasty deeps”—the early steps we take down this road will be useful even if true scientific superintelligence never arrives.

In the course of writing this piece, I realized that without trying I’d basically recapitulated Alice Maz’s vision for “AI-mediated human-interpretable abstracted democracy” articulated in her piece on governance ideology. Quoting Hieronym’s To The Stars, Maz suggests that an AI-mediated government should follow the procedure of human government where possible “so that interested citizens can always observe a process they understand rather than a set of uninterpretable utility‐optimization problems.” I think this is a good vision for science and the future of scientists, and it’s one I plan to work towards.

Just to make the implicit a bit more explicit: here at Rowan, we are very interested in working with companies building “AI scientists.” If you are working in drug discovery, materials science, or just general “AI for science” capabilities and you want to work with our team to deploy human-validated scientific tools on important problems, please reach out! We are already working with teams in this space and would love to meet you.

Thanks to many folks whom I cannot name for helpful discussions on these topics, and to Ari Wagen, Charles Yang, Spencer Schneider, and Taylor Wagen for editing drafts of this piece.